An Observer Called H Bar

Wormhole spaghetti feeds the masses, and some tomatoes get thrown around

Little treat for the physics nerds today, or for people who wonder why the ꖾꗍꝆꝆ Planck’s ħ is not zero, which would have spared us all the burden of free will. “Now why would he write that the ‘Observer is ħ’?” I hear you mumbling.¹

An Observer Called Stuckey

There’s an interesting arXiv paper I came across, and a related YouTube mini-series featuring Mark Stuckey.

Stuckey leans on information-theoretic reconstructions of QM to try to explain the “EPR Paradox”.

First, let’s point out that EPR is not a paradox, provided you drop one of these cherished principles:

1. No FTL signalling, or no retrocausality.
2. Locality, or local causality (no non-local causal events or correlations).
3. Determinism, at least of the time evolution of the spinors.
4. Reichenbach’s Principle of the Common Cause.
5. Statistical Independence: Alice and Bob can make “free will” choices of orientation for their spin/helicity measurements (or position/momentum, if they can design that version of EPR).
6. QM is Complete: give this one up and you are saying, “We’re missing something chaps!” This was Einstein’s view. It is the correct view (that QM is incomplete).

Do physics nerds not read much science history? Guys! We are always missing something!

Maybe I missed a couple out, but those are the big six.

Up front I want to remind you how T4G theory resolves EPR.

In T4G the Bell entanglement is due to a wormhole bridge, the ER-bridge. This is minimal spacetime geometry, so measurements easily collapse ER-bridges; but since vacuum entanglement is ubiquitous, the separated subsystems of the former Bell pair will always become entangled with other matter (particles in the instruments).

This means principle 1 looks violated from a Minkowski frame of reference, since Minkowski topology cannot admit wormholes. But once wormholes are admitted, principle 1 is not violated.

Likewise for principle 2: if you admit only Minkowski topology, then effects propagated across an ER-bridge will appear non-local, but in T4G all effects are still local and causal (within the light cone); they only look otherwise if you refuse to admit that the wormholes exist.

In T4G theory even Determinism is not truly lost, but we do have to give up on strict light cone time-evolution causality (which is not a bad thing to abandon, unless you are a dumb-dumb materialist who for some literally godforsaken reason doesn’t want free will), since there are nearly closed timelike curves in T4G. Even though they may not be traversable, they still imply the spacetime cobordism cannot be determined by data on any past Cauchy surface. (This is, essentially, an expression of Heisenberg’s Uncertainty Principle.)

I think T4G can retain Reichenbach and SI. But those principles are about probabilities, which are not truly physical. So we can take ’em or leave ’em. Personally, I like to think we do have some variety of “free will”. But, in any case, it is a pointless waste of time to debate SI. If it is true, why are we bothering to debate? Only because we cannot help it! If it is not true, then let’s not bother to debate it! OK, so let’s assume it is not true. If we assume wrongly then ꕷꖾꕯꖡ, we just could not help it. 🤷‍♂️

You can now see why No. 6 is the correct thing to give up, since our T4G view basically modifies all the other principles, and hence is screaming at us that quantum mechanics is incomplete.

All this recapped, I was intrigued by how Stuckey thinks he has a different resolution of the EPR Paradox. (Let’s grant it is a paradox if local causality and all the rest are still valid physical principles.) I find there is always something related to T4G in these newfound ways to avoid paradoxes.

A Tomato Taster

In the first video episode, Stuckey hints his resolution will be found in information theory. This is bad. Information is not a physical thing; it does not offer any physical explanation, only an accountancy tool.

This is a lot like the issue T4G has with spinors. Most people tend to think spinors are the fermions. They are not. The spinors are just transformation instructions. Information. Not substance, not spacetime geometry per se. So the spinors (wavefunctions) are not the particles. The spinors merely describe transformations of local frames of reference for our models of the particles.

But there was also a nice hint that Stuckey will get quite close to T4G, since he also mentions the key to the EPR paradox resolution is in superposition & entanglement — how they are two sides of a single coin. Very much also reminiscent of Jacob Barandes’ Indivisible Stochastic QM framework (ISQM). The trouble is, while ISQM relates entanglement to superposition (via indivisibility) it does not have a physical explanation.

I am going to guess Stuckey does not either.

I will guess Stuckey blabs on about “information… qubits… blahblahblah” and never really provides an account of why only elementary particles can get entangled.

Since I love a good surprise, I hope my guess is wrong.²

What do you think after watching Stuckey’s first video?

The Beauty of It

After the first video you have to watch the rest, since Stuckey’s idea is elegant to the point of beauty:

Superposition and entanglement are due to the frame independence of Planck’s constant ℏ.

Hence, just as SR and then later GR were based upon the frame independence of the speed of light, Stuckey is saying QM is based upon the principle of the frame independence of ℏ. How awesome is that?

It is very cool.

I think it will also be “very T4G”. In fact, I think I will just absorb it entirely into T4G theory, thank you very much.

However, I do not think T4G needs Stuckey’s ℏ principle, since we can get it already, without making it an axiom.

Is it Really Non-constructive?

Stuckey reckons his “ℏ is constant” resolution to EPRB is like Einstein’s resolution of length contraction and time dilation given no aether.

I had to write:

@2:10 what did Einstein mean by “constructive efforts”? He only meant he could not derive c = constant from “known facts”. But that is false: he could have derived this from known facts, by recognizing the wave solutions in Maxwell’s theory were light waves. He might also have realized Maxwell theory is not correct, and only a classical statistical level theory, since photons are not waves. That’d count as “constructive” in my lexicon. Maybe tomaytoes vs tomartoes?

What he could not derive from known facts was how EM waves could propagate absent an aether, since there was no medium for the wave excitation. So he had to fudge it with the ‘Law of God’ that photons are massless. He was missing all the facts, of course, as we still are, since no one knows precisely what a photon is; it is known only by some quantum numbers (m=0, s=1, q=0).
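The “known facts” route to a constant c is easy to replay in a few lines: Maxwell’s equations in vacuum have wave solutions travelling at 1/√(μ₀ε₀), and plugging in the measured constants gives the speed of light. A minimal sketch, assuming the CODATA 2018 recommended values of μ₀ and ε₀ (since 1983 c itself is exact by definition, so this is a consistency check rather than a measurement).

```python
import math

# CODATA 2018 recommended values (SI units).
MU_0 = 1.25663706212e-6       # vacuum permeability, N A^-2
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F m^-1

def wave_speed(mu, epsilon):
    """Speed of the vacuum wave solutions of Maxwell's equations: 1/sqrt(mu*epsilon)."""
    return 1.0 / math.sqrt(mu * epsilon)

c_from_maxwell = wave_speed(MU_0, EPSILON_0)

if __name__ == "__main__":
    # Compare with the defined value c = 299792458 m/s.
    print(f"1/sqrt(mu0*eps0) = {c_from_maxwell:.1f} m/s")
```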

OK, but later in that episode of his talks Stuckey gets around to saying this, so I was confused about what old Einstein meant by not being able to find a constructive account for length contraction and time dilation.

But wasn’t Einstein confused? Stuckey outlines the story the same way I just did. So it was constructive! Einstein used Maxwell theory. That gave an empirical constancy to the speed of light, and Michelson–Morley gave proof there was no aether medium (other than spacetime itself).

So I’d say this was highly constructive.

So, I just do not really know what Einstein meant.

The way Stuckey explains it is to point out that length contraction is “not causal”. Right, so that’s maybe what Einstein meant: he could not find any causal process that physically invariantly (we could say covariantly) contracts an object’s dimensions. It is an artifact of measurements. The observers can always make the Lorentz transforms to obtain the coordinate-independent quantities, which remain invariants of motion; in this case, the dimension of the object in Its own frame of rest.

Thus Einstein was right to settle on not bothering to find a “causal/constructive” account, since there was none! He was focusing on a measurement artifact (the coordinate length) not the physical invariants (dimension of the thing in Its own rest frame).

That’s really a gauge principle.
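The measurement-artifact point can be checked with a minimal sketch, assuming a rod at rest along x in frame S, units with c = 1, and illustrative function names: an observer in S′ moving at v marks both ends at the same S′-time and gets the coordinate length L/γ, yet any observer can undo the boost and recover the same rest length L.

```python
import math

def lorentz_boost(t, x, v):
    """Map event (t, x) in S to (t', x') in S' moving at velocity v along x (c = 1)."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x), gamma * (x - v * t)

def coordinate_length(rest_length, v):
    """Length of a rod at rest in S (ends at x = 0 and x = rest_length), measured in S'.

    S' marks both ends at the same S'-time t' = 0. For an end at fixed x,
    t' = gamma * (t - v * x) vanishes when t = v * x.
    """
    _, x0 = lorentz_boost(0.0, 0.0, v)
    _, x1 = lorentz_boost(v * rest_length, rest_length, v)
    return x1 - x0

def rest_length(measured_length, v):
    """Undo the contraction: the frame-independent length in the rod's own rest frame."""
    return measured_length / math.sqrt(1.0 - v * v)

if __name__ == "__main__":
    for v in (0.0, 0.6, 0.8, 0.99):
        seen = coordinate_length(1.0, v)
        print(f"v = {v}: measured {seen:.4f}, rest length {rest_length(seen, v):.4f}")
```

The coordinate length changes from frame to frame; the rest length computed from it does not, which is the invariant the paragraph above points to.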

To be fair, in Albert’s day gauge principles were not really “a thing”; maybe Emmy Noether knew of the concept(?), but most did not, especially not the economists with regard to government currencies! (Which are also gauge.) The lesson modern physicists learned was to never use gauge quantities (like coordinates) as your founding principles; it’ll only lead to 𝖇𝖔𝖓𝖉𝖆𝖌𝖊 and 𝖕𝖆𝖎𝖓.

Good. Now the question is whether this story is the same or similar to the one we could tell for Planck’s constant ℏ. That’s Stuckey’s promise. The plot thickens.

Stern-Gerlach

In the second video Stuckey uses the Stern–Gerlach apparatus to introduce the first way in which the principle of frame-independence of ℏ is evident. It is a nice opportunity to gel with T4G too.

The idea is that the Stern–Gerlach measurement of a fermion’s spin deflection is “quantum”: the spin eigenvalue is s_z = ±ℏ/2 (equivalently ±h/4π). And this eigenvalue (or the other) will be recorded in all reference frames. This makes the experiment a universal determination of Planck’s constant.

Eerrr, well, I have not witnessed any such experiment with shifting S–G frames, but I’ll take it on faith this is the case.
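The rotational part of this claim can at least be checked in standard Pauli theory (not a T4G model). A minimal sketch: the spin component along a unit direction n is S_n = (ℏ/2) σ·n, and its eigenvalues come out as ±ℏ/2, i.e. ±h/4π, for every direction, so orienting the Stern–Gerlach magnet any way you like yields the same pair of values. It covers only rotations of the measurement axis, not boosts; units default to ℏ = 1 and the helper names are illustrative.

```python
import math
import random

def spin_component(n, hbar=1.0):
    """S_n = (hbar/2) * (nx*sigma_x + ny*sigma_y + nz*sigma_z) as a 2x2 nested list."""
    nx, ny, nz = n
    half = hbar / 2.0
    return [[complex(half * nz), half * complex(nx, -ny)],
            [half * complex(nx, ny), complex(-half * nz)]]

def hermitian_eigenvalues(m):
    """Closed-form eigenvalues (ascending) of a 2x2 Hermitian matrix [[a, b], [b*, d]]."""
    a, d, b = m[0][0].real, m[1][1].real, m[0][1]
    mean = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + abs(b) ** 2)
    return mean - radius, mean + radius

def random_direction(rng):
    """Uniformly random unit vector (normalized Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-12:
            return [c / norm for c in v]

if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        n = random_direction(rng)
        print([round(c, 3) for c in n], hermitian_eigenvalues(spin_component(n)))
```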

The interesting thing is that classical magnetic moments (quasi-fermions) will not yield an S–G splitting, so the spin eigenvalues cannot be recorded. Why not?

It is because in the special arrangement of Stern–Gerlach, the deflection of the “little magnets” is random.

Right. But… the same is true for electrons! They go into the S–G magnetic field with random orientation, and are only split into two streams due to a polarizing effect. Their spin-z orientation is induced by the magnetic field. Most physics teachers do not know this! Most textbooks do not mention it! Yikes. Feynman rolls over in his grave again.

So how can we get a split into two discrete beams?

Well, shocker for the high school physics teacher who reads only textbooks: we do not see a split into two discrete beams. There is always a fuzzy width. Nevertheless it is still non-classical, since electrons will get split into two basically separate bunches, the Sz “up” and the Sz “down” (alignment/anti-alignment with the polarizing magnetic field direction).
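For contrast, here is the textbook idealization of the two cases, as a sketch only. The deflection is taken proportional to the moment’s z-component μ_z = μ cos θ; for classical moments with isotropically random orientations cos θ is uniform on [−1, 1], giving a continuous smear across the screen. The two-valued case simply puts the outcomes ±1 in by hand: it does not model how the split arises (the polarizing “quantum torque” discussed here), nor the fuzzy beam width. Bin counts and sample sizes are illustrative.

```python
import random

def classical_deflections(n, rng):
    """Deflection taken proportional to cos(theta) for isotropically oriented moments."""
    # For directions uniform on the sphere, cos(theta) is uniform on [-1, 1].
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

def quantized_deflections(n, rng, p_up=0.5):
    """Idealized two-valued case: each deflection is +1 (up) or -1 (down), put in by hand."""
    return [1.0 if rng.random() < p_up else -1.0 for _ in range(n)]

def histogram(values, bins=10, lo=-1.0, hi=1.0):
    """Count values into equal-width bins on [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        k = min(int((v - lo) / width), bins - 1)  # v == hi goes into the last bin
        counts[k] += 1
    return counts

if __name__ == "__main__":
    rng = random.Random(1)
    print("classical:", histogram(classical_deflections(10_000, rng)))
    print("quantized:", histogram(quantized_deflections(10_000, rng)))
```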

The effect is still “quantum” not “classical”. The sensitivity to ℏ is the key thing, and the term which classical approximations ignore is what Dewdney et al. (1988) dubbed the “quantum torque”.

It is not a scary term; it appears even in plain vanilla Pauli theory:

It is the pseudo-vector S which contains the constant ℏ, and so is de facto dropped (ignored) in the classical treatment of a small magnetic dipole passing through an inhomogeneous magnetic field.

It is ignored classically because it is such a tiny effect. The torque is strong enough only for polarizing particles of extremely light mass.

In principle you could thus also see spin deflection classically, if you (a) prepared identical magnetic moments and (b) made them extremely light, or made the magnetic field ginormous (to the point of extremely hazardous).

But anyway, this does not change Stuckey’s main point, which is that if you do get a bifurcated S–G beam splitting, then you have universally measured the value of ℏ.

He says,

“So we can attribute the property of spin angular momentum to the observer independence of ℏ.”

But is that right? I honestly could not follow the logic. Is it just far too obvious? Am I missing something? I had to take a break and a cuppa tea to think about it.

🫖🍵🍃

First of all, the quote above makes no sense to me. Spin angular momentum is nothing but a consequence of the symmetry of an elementary particle upon rotation. If it is a charged particle we can exploit that symmetry to measure the charge current (or equivalently its intrinsic magnetic moment) for a particle more-or-less at rest (maybe zipping across the lab at a thousand metres per second, but that’s almost at rest for our best clocks these days).

Thus, what spin measurements are telling us is that elementary particles have stable ground states, which they occupy almost all the time; we cannot excite them up to higher spin states. Experimentalists have only achieved higher spin states by combining elementary particles into clusters, a fair few of which nature provides Herself, called atoms.

Maybe this gets me closer to Stuckey’s real point?

The point would be that no one has (yet) ever boosted themselves to such speeds or accelerations as to see any particles in higher spin states. All the experiments we’ve ever performed give the same spin eigenvalues, s_z = ±ℏ/2.

This does not give us quantum mechanics, though; it does not imply superposition and entanglement.

Entanglement is a property of a composite (two-or-more-particle) system, not a single spin. A particle can get “self-entangled”, but only by virtue of the existence of other nearby matter (the material in the slits in a Two Slit experiment, or the photons radiating out of the coils in the magnets of the Aharonov–Bohm effect, or the crystals in the solid in electron diffraction).
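A minimal sketch of why entanglement needs at least two subsystems, in standard QM rather than T4G: for a two-qubit pure state with amplitudes c[i][j], the first qubit’s reduced density matrix is ρ_A = C C†, and its von Neumann entropy is 0 bits for a product state and 1 bit for a Bell state. A lone qubit has nothing to trace out, so the question does not even arise. Function and variable names are illustrative.

```python
import math

def reduced_density_matrix(c):
    """rho_A[i][j] = sum_k c[i][k] * conj(c[j][k]) for amplitudes c[i][k] = <i k|psi>."""
    return [[sum(c[i][k] * c[j][k].conjugate() for k in range(2)) for j in range(2)]
            for i in range(2)]

def entanglement_entropy(c):
    """Von Neumann entropy, in bits, of the first qubit's reduced state."""
    rho = reduced_density_matrix(c)
    a, d, b = rho[0][0].real, rho[1][1].real, rho[0][1]
    mean = (a + d) / 2.0
    radius = math.sqrt(((a - d) / 2.0) ** 2 + abs(b) ** 2)
    entropy = 0.0
    for lam in (mean - radius, mean + radius):
        if lam > 1e-12:  # terms with lam = 0 contribute 0 (0 log 0 := 0)
            entropy -= lam * math.log2(lam)
    return max(entropy, 0.0)  # clamp tiny negative rounding noise

s = 1 / math.sqrt(2)
product = [[complex(s), complex(s)], [0j, 0j]]  # |0> (|0> + |1>)/sqrt(2)
bell = [[complex(s), 0j], [0j, complex(s)]]     # (|00> + |11>)/sqrt(2)

if __name__ == "__main__":
    print(f"product state: {entanglement_entropy(product):.3f} bits")
    print(f"Bell state:    {entanglement_entropy(bell):.3f} bits")
```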

We can do slightly better, though: a particle can also get “self-entangled” with the spacetime vacuum. Then, best of all, in T4G we can have elementary particles (only those in the Standard Model) with internal wormhole bridges, as they almost must have if they are to be accounted for by 4D spacetime topology, since it is not possible (so I believe) to get the full symmetries of the Standard Model, triality included, without a doubled spacetime algebra Clifford frame. But then that’s the limit.

How does this square with what Stuckey is trying to say?

I could say that vacuum entanglement does naturally account for EPRB. What needs to occur is an initial interaction between the particles, so the vacuum entanglement is transferred to the pair of Bell particles themselves. Engineers like Aspect, Clauser and Zeilinger know how to wrangle this (or their tech staff do), and they got the Nobel Prize for their entanglement-hacking abilities, without any Swedish cash going to the tech staff. Shame on youz nerdz. However, I do not attribute this to a frame-independent ℏ.³

I see things as more like Einstein in relation to Maxwell’s Theory.

Why the hell is there an ℏ?

For Maxwell/Einstein, it was: why the hell is there a

$$c = \frac{1}{\sqrt{\mu_0 \varepsilon_0}}$$

in the electromagnetic theory?

Saying, “It’s a Principle!” has no bite to me. It was almost all empirical, but had to be combined with the Michelson–Morley result of no aether (other than spacetime itself).

Note:

No aether other than spacetime itself — is what makes Maxwell’s theory a gauge theory. Electromagnetic theory gauges a phase symmetry for photon interactions, now just called the u(1) symmetry.

But u(1) gauge theory to me is not a principle, it’s an observation of a symmetry in nature (electric charge conservation, essentially). The principle is that the mathematics of the Lie algebra is a good description of electron–photon interactions. Anyway, that’s tomaytoes and tomartoes again. It doesn’t matter if we say it’s a constructive theory or a principle theory. It’s both.
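The gauge freedom in electromagnetism can be checked numerically in a small sketch. In two dimensions the magnetic field is B_z = ∂A_y/∂x − ∂A_x/∂y, and shifting the potential by a gradient, A → A + ∇χ (the potential half of a U(1) gauge transformation), leaves B_z unchanged because mixed partial derivatives commute. The particular A and χ below are arbitrary hypothetical choices, and derivatives are approximated by central differences.

```python
import math

H = 1e-4  # central-difference step

def d_dx(f, x, y, h=H):
    return (f(x + h, y) - f(x - h, y)) / (2 * h)

def d_dy(f, x, y, h=H):
    return (f(x, y + h) - f(x, y - h)) / (2 * h)

def b_z(ax, ay, x, y):
    """Magnetic field B_z = dA_y/dx - dA_x/dy of a 2D vector potential (A_x, A_y)."""
    return d_dx(ay, x, y) - d_dy(ax, x, y)

# Hypothetical vector potential, chosen only for illustration.
def ax(x, y):
    return -0.5 * y + math.sin(x * y)

def ay(x, y):
    return 0.5 * x + x * x * y

# Gauge-transformed potential A + grad(chi), with chi(x, y) = exp(0.3 x) cos(y).
def ax_gauged(x, y):
    return ax(x, y) + 0.3 * math.exp(0.3 * x) * math.cos(y)   # + d(chi)/dx

def ay_gauged(x, y):
    return ay(x, y) - math.exp(0.3 * x) * math.sin(y)         # + d(chi)/dy

if __name__ == "__main__":
    for x, y in [(0.0, 0.0), (0.7, -1.2), (2.0, 3.0)]:
        print((x, y), b_z(ax, ay, x, y), b_z(ax_gauged, ay_gauged, x, y))
```

The potential changes, the field does not: only gauge-invariant combinations like B are candidates for physical quantities.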

I do not think Stuckey has come up with the equivalent yet. Why the hell is there an ℏ? is not explained.

As far as I can tell he thinks it is explained by the Principle of information invariance and continuity, and that this principle is equivalent to saying Planck’s constant is the same in all reference frames.

I think that’s the argument. Is it a good argument?

Information Invariance and Continuity

About 4 minutes into his 4th episode, Stuckey attempts to prove QM follows from a Principle: Information Invariance and Continuity.

This is well known these days as the only non-classical postulate of QM in the generalized probabilistic theories (GPT) framework (inherited from Lucien Hardy’s original axiomatization of QM).

It does not fly with me though, because it does not explain the elementary particle symmetries. Thus what Stuckey has (and Hardy, and the rest of the GPT crowd) is a general framework for the Measurement Theory of QM. It is not truly ℚ𝕦𝕒𝕟𝕥𝕦𝕞 ℙ𝕙𝕪𝕤𝕚𝕔𝕤 writ large.

Specifically, GPTs show that QM permits continuous transitions between ‘states’ (meaning the spinors). But that is a model property, not a physics principle. It is equivalent to allowing superpositions (that’s how the continuous transitions are realized in our models).
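A small sketch of what “continuous transitions between states” buys, in the spirit of Hardy’s continuity axiom (an illustration, not Hardy’s or Stuckey’s own construction): a qubit can be carried from |0⟩ to |1⟩ by a continuous rotation that keeps it pure the whole way (purity Tr ρ² = 1), whereas a classical bit’s pure states are isolated points, so any continuous path between them, such as the straight line used here, must pass through mixtures whose purity Σp² dips to 1/2 halfway. Function names are illustrative.

```python
import math

def qubit_path(t):
    """Pure state reached by rotating |0> about the y axis by pi*t: cos(pi t/2)|0> + sin(pi t/2)|1>."""
    return [math.cos(math.pi * t / 2), math.sin(math.pi * t / 2)]

def qubit_purity(psi):
    """Tr(rho^2) for rho = |psi><psi| (real amplitudes); 1 means a pure state."""
    rho = [[psi[i] * psi[j] for j in range(2)] for i in range(2)]
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))

def bit_path(t):
    """Straight-line path from the deterministic bit state (1, 0) to (0, 1)."""
    return [1.0 - t, t]

def bit_purity(p):
    """Sum of p_i^2; equals 1 only at the deterministic (pure) classical states."""
    return sum(x * x for x in p)

if __name__ == "__main__":
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"t={t:.2f}  qubit purity={qubit_purity(qubit_path(t)):.3f}  "
              f"bit purity={bit_purity(bit_path(t)):.3f}")
```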

But there is no reason for superpositions to be real. They are probably fictional, and they are accounting tools. They account for the indivisible stochastic dynamics (ISQM). ISQM is also a statistical account, not a causal account. So there is an explanatory gap, which neither Stuckey nor Barandes manages to close. Why those sorts of statistics? (The statistics of indivisible non-Markov transitions or equivalently superpositions and entanglements as derived from the postulate of information invariance and continuity).

My opinion is that this will not do! Call the schoolmaster!…

Anyway, the concept is that the EPR measurements have a symmetry.

The situation is such:

Suppose Alice ⭐’s some of her spin=↑ results, because she was feeling jolly at the time.

Although there should be perfect Alice↔Bob symmetry, if you look at things from Alice’s starred (⭐) perspective she might say, “Look, I got 100% spin=↑ on these.” Bob says, “For those you noted down, I got 33% spin=↑ and 67% spin=↓.”

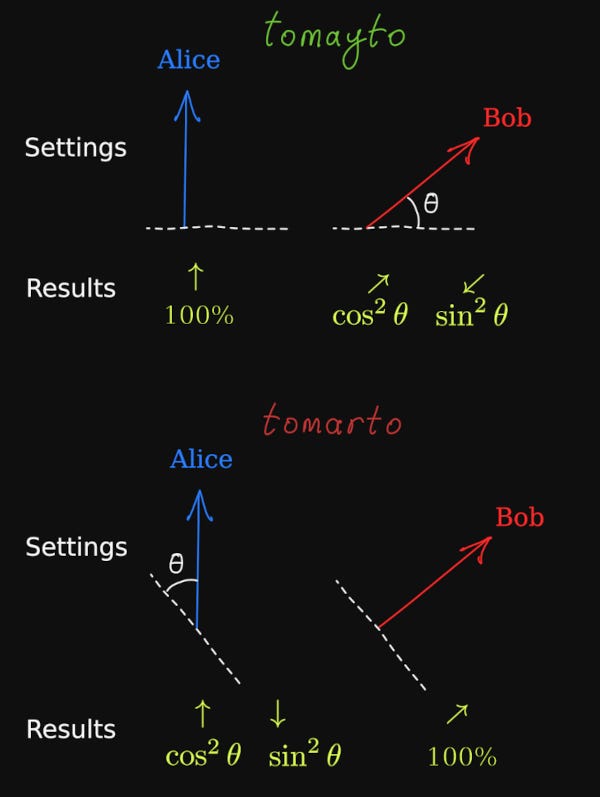

Picture: you see the issue, right? Can I be any clearer? Both these cases are exactly the same. The only thing we shifted was in our heads: which reference line to take as defining the angle θ, Alice’s (top) or Bob’s (below). The angle is a fiction; it is gauge.

But then you examine Bob’s data, and he says, “On these I got 100% spin=↑.” Chucking away her starred results, Alice says, “Oh yeah, I got 66% spin=↑ and 34% spin=↓ on those.”

In each case, by “on those” they mean certain coincidence counts, which ensure they’re likely the prepared Bell pairs, not cosmic rays or noise.

Stuckey’s idea, which is one I’ve written about many times before, is that Nature does not care whose perspective is “starred”. The evil of gold ⭐ rewards don’t you know?

Thus, there being no preferred frame of reference, Nature must play fair, and both these correlations are correct.
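The “Nature plays fair” symmetry can be checked in a minimal sketch, assuming the Bell state (|00⟩ + |11⟩)/√2 with both spins measured in the x–z plane (a common textbook choice; the exact state and angles behind the picture above are not specified here). Computing the joint probabilities from the state gives P(Bob ↑ | Alice ↑) = P(Alice ↑ | Bob ↑) = cos²(Δθ/2) for any relative angle Δθ, so neither the ⭐-marked perspective nor the choice of reference line is privileged. Names are illustrative.

```python
import math

# Amplitudes c[i][j] of the Bell state (|00> + |11>)/sqrt(2), real for simplicity.
BELL = [[1 / math.sqrt(2), 0.0], [0.0, 1 / math.sqrt(2)]]

def up_along(theta):
    """Spin-up along (sin theta, 0, cos theta): cos(theta/2)|0> + sin(theta/2)|1>."""
    return [math.cos(theta / 2), math.sin(theta / 2)]

def p_up_up(theta_a, theta_b, c=BELL):
    """Joint probability that Alice gets up along theta_a and Bob gets up along theta_b."""
    ua, ub = up_along(theta_a), up_along(theta_b)
    amp = sum(ua[i] * ub[j] * c[i][j] for i in range(2) for j in range(2))
    return amp * amp

def p_bob_up_given_alice_up(theta_a, theta_b):
    # "Down along theta" is "up along theta + pi", so this sums over Bob's two outcomes.
    p_alice_up = p_up_up(theta_a, theta_b) + p_up_up(theta_a, theta_b + math.pi)
    return p_up_up(theta_a, theta_b) / p_alice_up

def p_alice_up_given_bob_up(theta_a, theta_b):
    # Same marginalization, this time over Alice's two outcomes.
    p_bob_up = p_up_up(theta_a, theta_b) + p_up_up(theta_a + math.pi, theta_b)
    return p_up_up(theta_a, theta_b) / p_bob_up

if __name__ == "__main__":
    a, b = 0.0, 2 * math.pi / 3  # 120 degrees apart (illustrative)
    print(p_bob_up_given_alice_up(a, b), p_alice_up_given_bob_up(a, b))
```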

There is no contradiction, since these are inspecting (mostly) different subsets of the coincidence count data. It is only ever from the statistics that we get a hint that the EPR particles were entangled. A single measurement can tell us nothing (about entanglement).

As in Einstein’s case, there is a causal–empirical account available: for Einstein it was spacetime plus u(1) symmetry for photons and photon–electron interaction. I think there is also a spacetime account available for information invariance and continuity, which Stuckey is missing. (Topological invariants.)

I agree Stuckey’s idea is a good principle for foundations of QM. But I also think there is a deeper underpinning reason for the principle holding.

Maybe it’s my tomayto and tomarto again? But I think we should demand more than a metaphysical, “it’s just weird statistics and correlations!” (non)explanation.

Unlike Stuckey’s account, T4G theory has a beautiful (and also spacetime) explanation for the EPR correlations. Nevertheless, Stuckey is quite right: T4G conforms with his more metaphysical principle of information invariance and continuity. He just does not have the analogue of Maxwell’s Theory to account for it the way Einstein had for SR and GR. Stuckey only has his Michelson & Morley (the EPRB experimentalists).

Stuckey is like an Einstein without his Maxwell. He has a constancy of ℏ, but has no clue about why.

Contrary to Stuckey, I would not say SR and GR are Principle Theories. Principles are part of GR for sure, the gauge principles of coordinate remapping invariance, but these are based on a solid “unprovable” metaphysical foundation that 4D pseudo-Riemannian spacetime is a real “thing”. I think Stuckey should be delighted if he ever learns about T4G theory, because we have his James Clerk Maxwell ready (you could say it is Juan Maldacena if you like), and we know where ℏ comes from (the wormhole oscillations).

I file this all under the,

“Probabilistic and statistical explanations are not proper explanations,” cabinet folder of quantum curiosities.

The NPRF + ℏ + c Worldview

NPRF = No Preferred Reference Frame.

NPRF + c yields special and general relativity, while NPRF + ℏ yields (part of) QM.
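The “NPRF + c” half of the slogan can be seen in a few lines: with the relativistic velocity-composition law, light moving at c relative to one frame moves at c relative to every frame, whereas Galilean addition would give c + v. A minimal sketch in units with c = 1 and collinear motion only; it says nothing about the ℏ half, which is the part in dispute here.

```python
C = 1.0  # units where the speed of light is 1

def compose_relativistic(u, v, c=C):
    """Velocity seen from S of an object moving at u in S', where S' moves at v relative to S."""
    return (u + v) / (1.0 + u * v / (c * c))

def compose_galilean(u, v):
    """Pre-relativistic rule, for comparison."""
    return u + v

if __name__ == "__main__":
    for v in (0.0, 0.5, 0.9, -0.9):
        print(v, compose_galilean(C, v), compose_relativistic(C, v))
```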

With this, Stuckey offers a worldview or ontology for resolving the Measurement Problem.

He claims macroscopic objects provide a classical world, and that we live in a Quantum–Classical Contextual Universe. He reckons “Bodily objects” (which have definite position and momentum) emit and absorb “quanta” (which do not have simultaneous values for incompatible observables), and that the two types are not the same.

Stuckey: “Bodily objects and Quanta are co-fundamental.”

Too bizarre for my taste; it ain’t proper physics. I think it is barmy, but a nice try. I do not mind a bit of Dualism when it is appropriate (clearly distinct Types, as in Mind/Body), but bodily objects are composed of quanta, in my humble view, and do not magically change Type just because they get bound together, so this ontology does not fly with me.

A Recovery

I think I can give Stuckey almost what he wants, though. You do not need to postulate an extra ontological Type of “Bodily Matter”. Just use T4G entanglement. Entanglement is (as Stuckey notes) why things behave quantum mechanically. It is somewhat telling that his worldview is very Copenhagen-like (a type of unjustified dualism); it is a sign that he does not know what entanglement really is, in essence.

He is going more for the Emitter–Absorber theory, with a twist.

I am saying there is no need to do that. Bulk matter already ruins quantum behaviour because the entanglement gets uncontrollable. There is no coherence in bulk matter, nor in highly dense, highly energetic matter, and so superpositions simply cannot become statistically effective. But since superpositions are only statistical, that means the model of time evolution described by QM breaks down: it becomes inapplicable.

Note that quantum physics itself never breaks down. It is always applicable, and there is always entanglement, at least with the vacuum. What breaks down is the orthodox model we have for the physics.
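The “bulk matter ruins coherence” claim has a standard toy version (ordinary decoherence, not a T4G model), sketched here under stated assumptions. A qubit a|0⟩ + b|1⟩ couples to N environment particles, each of which ends up in |e0⟩ or |e1⟩ depending on the qubit; the per-particle overlap ⟨e1|e0⟩ = cos φ is a hypothetical parameter. Tracing out the environment leaves the qubit’s off-diagonal element ρ₀₁ = a b* ⟨E1|E0⟩ = a b* (cos φ)^N, which dies off exponentially with N, while nothing in the underlying quantum description has stopped applying.

```python
import math

def inner(u, v):
    """<u|v> for single-particle state vectors given as lists of complex amplitudes."""
    return sum(x.conjugate() * y for x, y in zip(u, v))

def product_overlap(env1, env0):
    """<E1|E0> for product states: the product of per-particle overlaps <e1_k|e0_k>."""
    total = 1 + 0j
    for u, v in zip(env1, env0):
        total *= inner(u, v)
    return total

def qubit_coherence(a, b, env0, env1):
    """|rho_01| of the qubit in a|0>|E0> + b|1>|E1> after tracing out the environment."""
    return abs(a * b.conjugate() * product_overlap(env1, env0))

# Hypothetical per-particle record: overlap <e1|e0> = cos(phi).
phi = 0.3
e0 = [1 + 0j, 0j]
e1 = [complex(math.cos(phi)), complex(math.sin(phi))]
a = b = complex(1 / math.sqrt(2))

if __name__ == "__main__":
    for n in (0, 10, 100, 1000):
        print(n, qubit_coherence(a, b, [e0] * n, [e1] * n))
```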

That’s where I’ll end it for today.

Just a cryptic little joke, you can figure it out by observing yourself when eating spaghetti, or rather, take the point-of-view of the noodle.

I quite enjoy writing in real time. The potential for getting egg on the face goes well with spaghetti and tomatoes.

That segue made me giggle, so I left it there.

I appreciate the clarity of your article and the care you take in distinguishing what counts as “physical.” I agree with you that information, by itself, isn’t a physical substance in the way mass or charge is.

But it does have physical consequences, because it carries the structural imprint of the energetic event that produced it. When that structure reaches a boundary, it determines how energy is released or absorbed locally. So even if information isn’t physical in itself, its effects are, and they depend entirely on boundary conditions.

You’ve made the point that information isn’t physical and I agree with part of that statement but only part.

If by “information” we mean meaning, then yes, meaning is something an observer/boundary assigns. A magnetic field doesn’t “mean” anything by itself. Meaning arises at the boundary through interaction/instruction.

But the field boundary is still there.

This is the same distinction Shannon drew decades ago:

Information = structure (I prefer instruction)

Meaning = interpretation

If we mix the two up, we end up dismissing half of physics. From this perspective, meaning isn’t real without an observer boundary. But information as instruction, via pattern structure, absolutely is, and at that point the real issue becomes:

Where do the constants that govern those structures come from?

On that topic, I think Stuckey is elegant but incomplete. Saying ℏ is frame-independent is important, but it doesn’t explain why it exists or why it has the value it does. That’s the bit you were asking for, the Maxwell to go with Einstein’s principle, so to speak. There is a constructive explanation available.

You can derive c, ℏ, and α from the geometry of a closed recursive field system:

c comes from the universal update rate of projection and is the speed at which the field refreshes.

ℏ comes from the smallest stable twist in the recursion and is the quantum of action enforced by geometric torsion.

α arises from how the electric and magnetic projection channels scale relative to each other.

Those are physical mechanisms. Not metaphors, and not pure principles.

Stuckey gives a kinematic symmetry. A deeper geometric account explains why that symmetry holds. So we can keep his result (the frame-independence of ℏ), while giving it the thing you’ve been asking for:

a physical, constructive reason for why the constant is there in the first place.

The vector provides the instruction and the boundary determines the consequence. That's not abstract. ⛹🏻‍♂️